The AI Act is a comprehensive legislative framework governing the development, deployment and use of artificial intelligence (AI) systems within the European Union, with the aim of ensuring safety, transparency and respect for fundamental rights. On 13 March 2024, the European Parliament adopted the consolidated AI Act. Before the Act can be published in the Official Journal of the European Union and enter into force, it must be formally adopted by the Council of the EU. The AI Act also prohibits the use of certain AI systems and, under the current timeline, this ban is set to become applicable as early as the end of 2024.

Here’s what you need to know about the AI Act.

What steps do organisations need to take now?

To achieve compliance with the AI Act, we advise you to take the following steps:

- Map your AI systems – identify and map all AI systems developed or used in your organisation. We recommend keeping a central record of all AI systems and allocating responsibility for each one.

- Conduct an impact and risk assessment – identify the risks of these AI systems and categorise each AI system based on the risks and categorisation in the AI Act. Based on your activities, determine your role in the AI system supply chain. This will help you understand the impact of the AI Act on your organisation.

- Implement a governance system – develop an AI governance system and strategy. Put in place specific roles and responsibilities for overseeing compliance. Create internal documentation to demonstrate compliance.

- Continue to monitor AI use and regulatory developments – keep track of how AI is used in your organisation and keep an eye on the regulatory requirements, as further guidance on the AI Act will become available.
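The mapping and assessment steps above can be sketched as a small central register. This is only an illustrative sketch, not a compliance tool: the class and field names are hypothetical, the operator roles are taken from the list of actors discussed further below, and the risk labels are simplified placeholders (the example classifications are assumptions, not legal assessments).

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    # Operator roles as named in the AI Act overview in this article
    PROVIDER = "provider"
    DEPLOYER = "deployer"
    IMPORTER = "importer"
    DISTRIBUTOR = "distributor"
    PRODUCT_MANUFACTURER = "product manufacturer"
    AUTHORISED_REPRESENTATIVE = "authorised representative"


class RiskCategory(Enum):
    # Simplified placeholder labels (an assumption for this sketch)
    UNASSESSED = "unassessed"
    PROHIBITED = "prohibited"
    HIGH_RISK = "high-risk"
    OTHER = "other"


@dataclass
class AISystemRecord:
    name: str    # what the system is
    owner: str   # person responsible for the system internally
    role: Role   # the organisation's role in the supply chain
    risk: RiskCategory = RiskCategory.UNASSESSED


def needs_attention(records):
    """Return names of systems that are unassessed, prohibited or high-risk."""
    flagged = {
        RiskCategory.UNASSESSED,
        RiskCategory.PROHIBITED,
        RiskCategory.HIGH_RISK,
    }
    return [r.name for r in records if r.risk in flagged]


# Hypothetical entries; the risk labels are examples only
registry = [
    AISystemRecord("CV screening tool", "HR lead", Role.DEPLOYER, RiskCategory.HIGH_RISK),
    AISystemRecord("Spam filter", "IT lead", Role.DEPLOYER, RiskCategory.OTHER),
    AISystemRecord("Support chatbot", "Product lead", Role.PROVIDER),
]

print(needs_attention(registry))
```

Defaulting new entries to an "unassessed" status means that a system added to the register cannot silently skip the risk assessment step.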

Scope and applicability

Definition of an AI system

The AI Act provides a flexible and comprehensive definition of an “AI system”. The definition is intended to accommodate future technological advances while remaining easy to understand, and it emphasises the autonomy of the system in question.

An “AI system” is accordingly defined as “a machine-based system designed to operate with varying levels of autonomy, that may exhibit adaptiveness after deployment and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations or decisions that can influence physical or virtual environments”.

Extraterritorial effect

Once in force, the AI Act will apply not only within the EU, but also to non-EU organisations that place AI systems or models on the EU market, or put AI systems into service within the EU, as well as where the output of an AI system located outside the EU is used within its borders. Thus, the AI Act will likely influence AI regulation worldwide, much as the GDPR has influenced data protection developments.

Actors within scope

The AI Act imposes various obligations on the different actors involved in the AI system supply chain. Most notably, the AI Act sets requirements for:

- deployers (users) who use AI systems in the course of a professional (non-personal) activity

- providers, defined as actors who develop AI systems and place them on the market or put them into service under their name or trademark, regardless of whether for payment or free of charge

- importers and distributors who make AI systems available or place them on the EU market

- product manufacturers who manufacture high-risk AI systems covered by EU harmonised legislation (Annex I) and deploy these systems in the EU under the name or trade mark of the manufacturer

- authorised representatives of AI systems who are established in the EU and have a written mandate from a provider of an AI system to carry out their obligations

These are collectively referred to as operators under the AI Act.

Exclusions from applicability

The AI Act contains several specific exclusions from its scope. In particular, the AI Act will not apply to:

- natural persons using AI systems for purely personal, non-professional activity

- areas outside the scope of EU law, particularly the competencies of the member states concerning national security

- AI systems used exclusively for military, defence or national security purposes

- public authorities in a third country or international organisations using AI systems under international cooperation or agreements for law enforcement and judicial cooperation with the EU or member states

- AI systems developed and used solely for scientific research and development

- any research, testing or development activity relating to AI systems before they are marketed or put into service. Testing in real-world conditions is still within the scope of the AI Act.

- AI systems released under free and open-source licences, unless they are high-risk or fall under specific prohibited categories

Risk-based approach

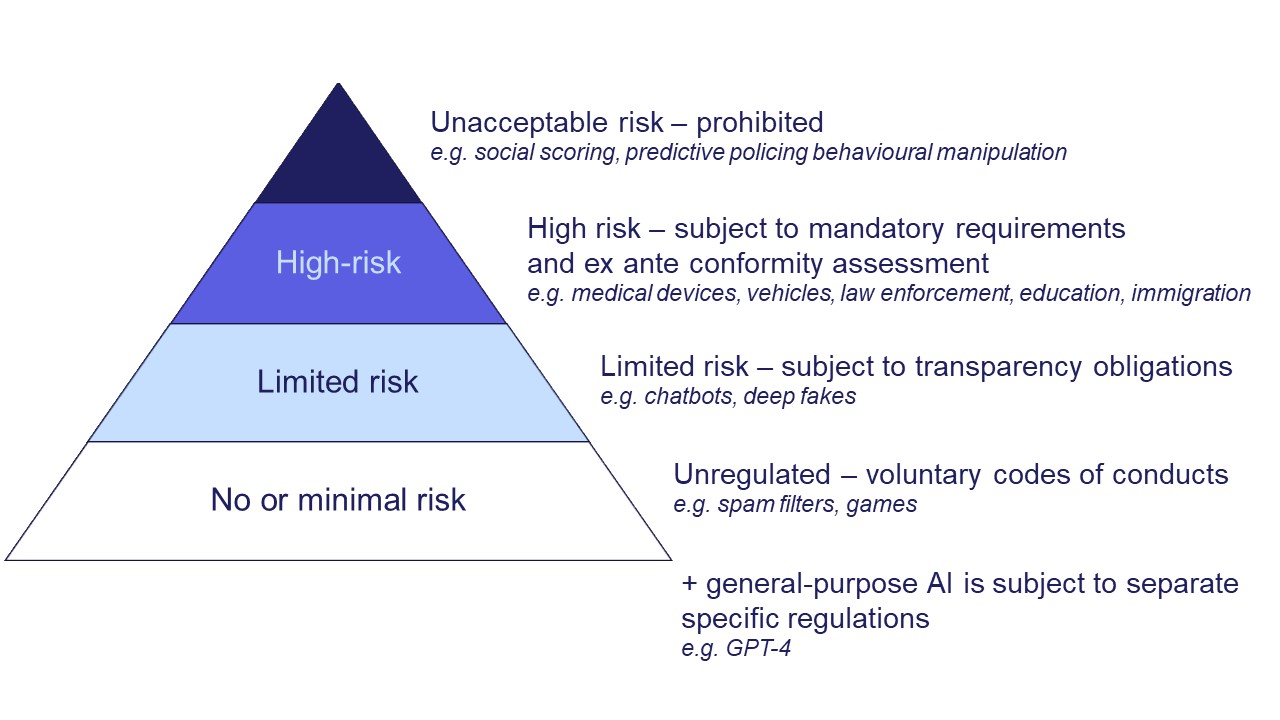

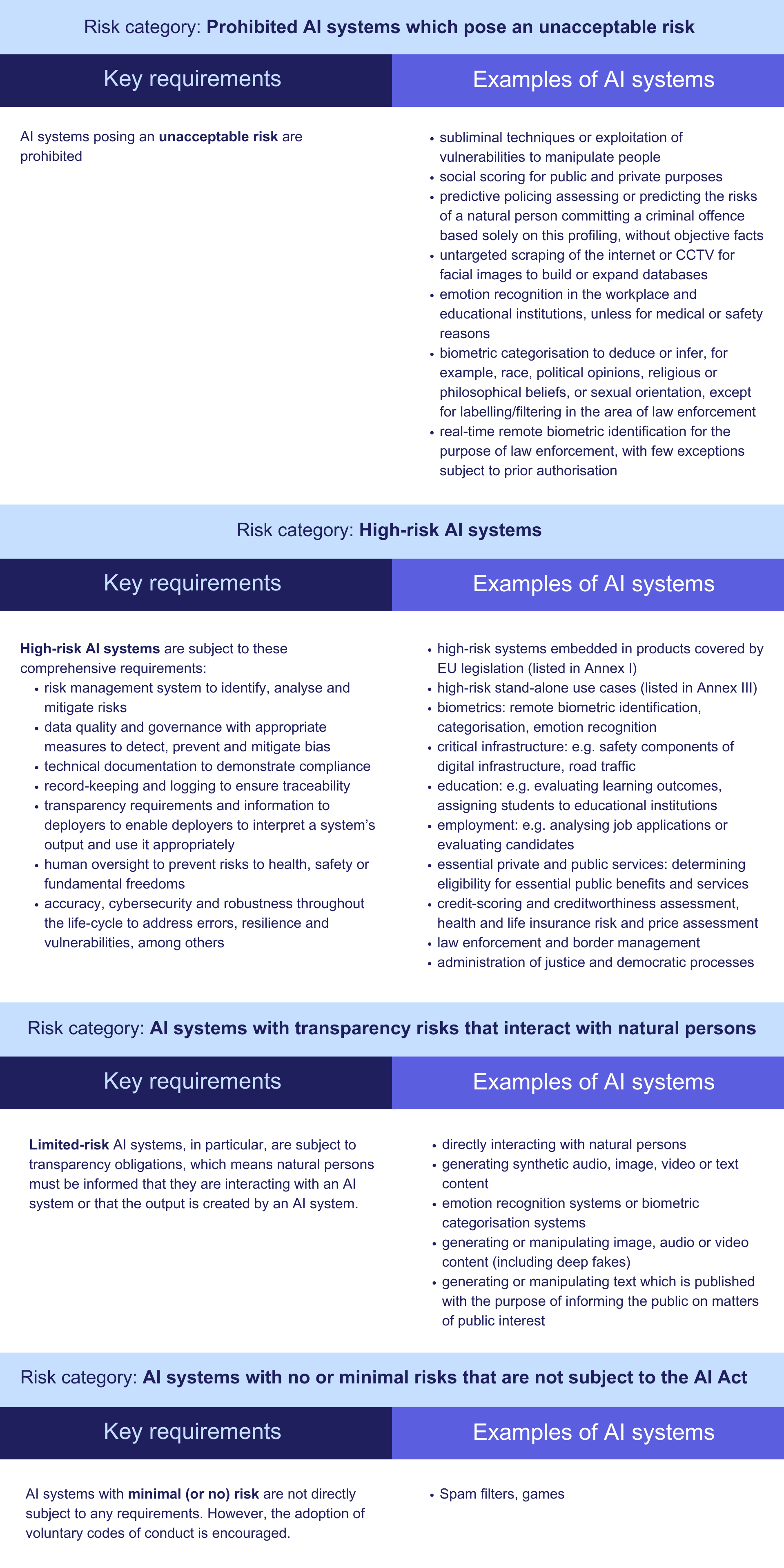

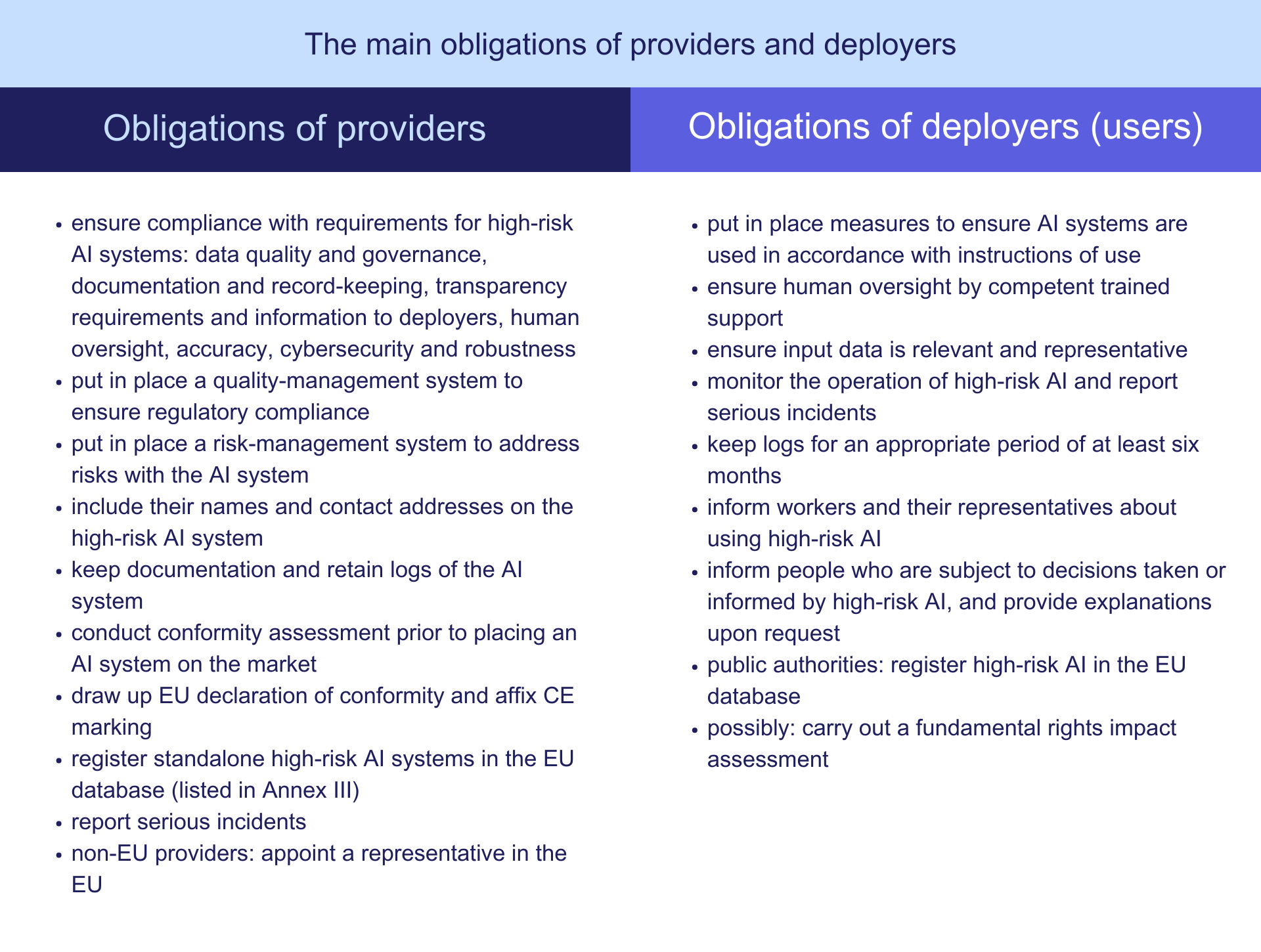

The AI Act follows a risk-based approach: it identifies different risk categories and sets conditions for the AI systems within its scope on the basis of their potential risks and level of impact. High-risk AI systems are therefore subject to stricter and more extensive requirements than “standard” AI systems, which pose less risk. The figures in this section illustrate the categories into which all AI systems have been divided.

Main obligations of operators

High-risk AI systems

The AI Act imposes obligations that depend on each operator's role. The most onerous requirements are imposed on operators of high-risk AI systems, which will be subject to risk management procedures throughout the life-cycle of the AI system. These requirements relate to, among other things, the establishment of an adequate risk assessment system, the implementation of data governance and management principles, record-keeping and logging, technical documentation and appropriate human oversight measures.

Most companies will likely fall within the category of being either a provider or deployer of AI systems. The main obligations of providers and deployers are:

General Purpose AI (GPAI) Systems

Separately, GPAI systems (models that can serve many different purposes, such as the well-known large language models that process text, audio, video, etc.) are subject to specific rules. With respect to GPAI models, the AI Act requires providers to:

- meet information and documentation requirements, mainly to ensure transparency for downstream operators

- put in place a policy to respect EU copyright law

- draw up and make public summaries of the content used for training

In addition, providers of GPAI models that pose a systemic risk have further obligations:

- state-of-the-art model evaluations, which include adversarial testing to identify and mitigate systemic risks

- risk assessment and mitigation at the EU level

- incident reporting and documentation

- adequate level of cybersecurity

Sanctions and enforcement timeline

Fines for non-compliance

The AI Act provides for stringent enforcement measures to ensure compliance, including fines and non-monetary measures. The majority of violations are subject to a fine of up to EUR 15 million or 3% of the infringer’s total worldwide turnover in the preceding financial year, whichever is higher. With respect to prohibited AI practices, the fine may reach up to EUR 35 million or 7% of turnover. The supply of incorrect, incomplete or misleading information to the relevant authorities in response to a request can be subject to a fine of up to EUR 7.5 million or 1% of turnover.
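To give a feel for how these caps scale with company size, the sketch below computes the maximum possible fine for each tier. It is a simplified illustration only: the function and tier names are hypothetical, and it assumes that the “whichever is higher” rule applies to all three tiers and that turnover is given in whole euros.

```python
# Fine tiers from the paragraph above: (fixed cap in EUR, percent of turnover)
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),
    "other_violation": (15_000_000, 3),
    "misleading_information": (7_500_000, 1),
}


def max_fine(tier, worldwide_turnover_eur):
    """Return the upper limit of the fine for a tier and a given turnover.

    Assumption for this sketch: the higher of the fixed cap and the
    turnover-based cap applies in every tier.
    """
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent / 100
    return max(fixed_cap, turnover_cap)


# Hypothetical company with EUR 1 billion turnover: 7% exceeds the fixed cap
print(max_fine("prohibited_practice", 1_000_000_000))

# Hypothetical company with EUR 200 million turnover: the fixed cap is higher
print(max_fine("other_violation", 200_000_000))
```

For larger organisations the percentage-based limit quickly overtakes the fixed amount, which is why the turnover figure matters when estimating exposure.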

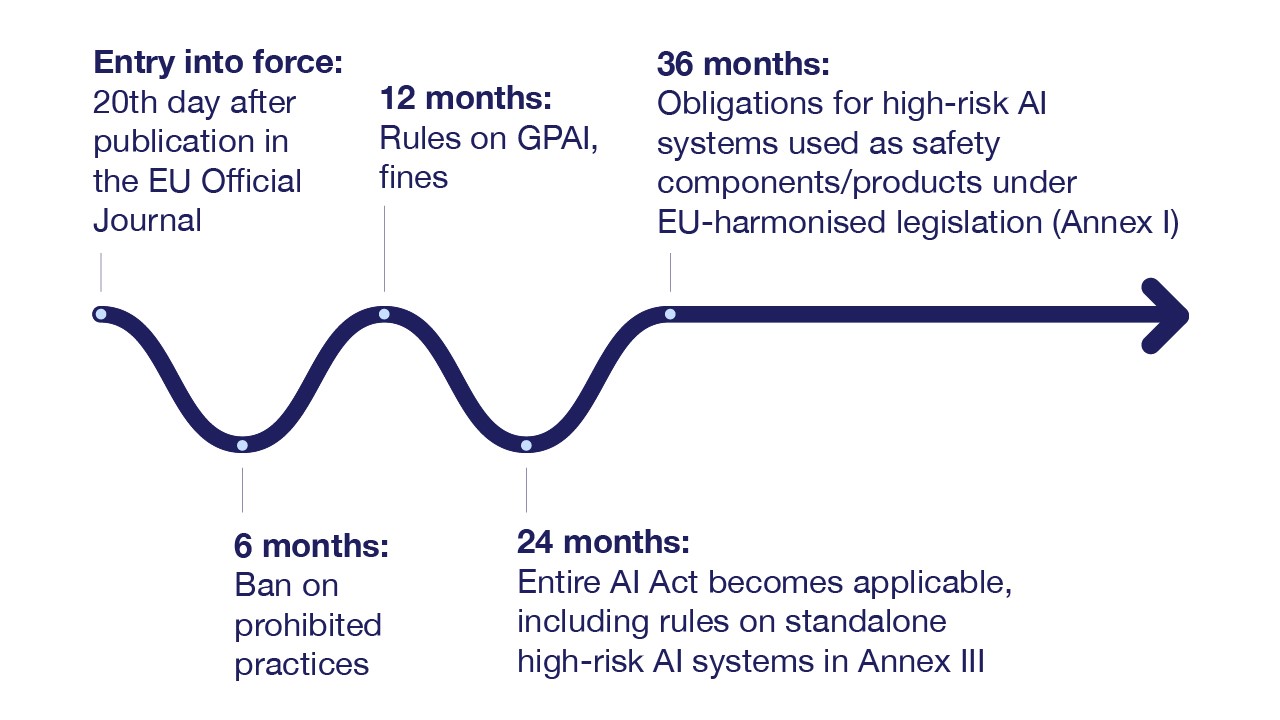

Enforcement timeline

Obligations under the AI Act will become applicable in stages, reflecting the risk-based approach applicable to AI systems. Under the current timeline, the prohibitions on certain AI use cases are expected to become applicable as early as the end of 2024:

See also our webinar recording on “The AI Act in practice: what it means for your business”.

Our Technology, Media & Telecommunications team is at your disposal, should you need advice on any legal issues you are facing.
